I wanted to start putting in some test configurations for a future project based on Tomcat 9.

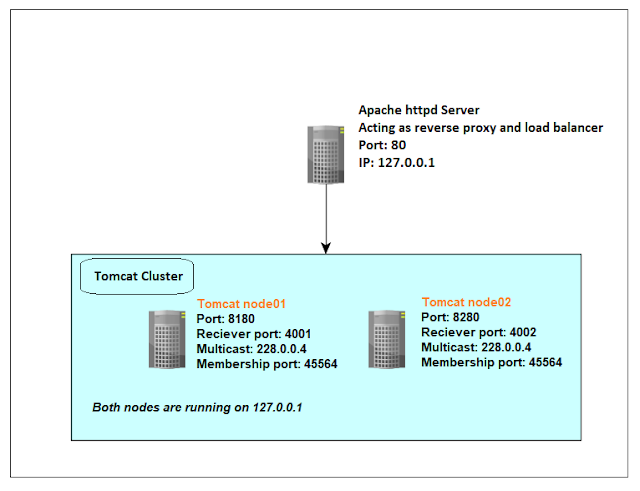

I needed a test environment that runs on a single VM, just to test my Apache and Tomcat clustering configuration. The test setup (pictured above) looks like this:

The setup has two Tomcat 9 nodes with clustering enabled, which allows sessions to be replicated between the two nodes. Session persistence is not configured yet.

To use the cluster effectively, we also need a load balancer to proxy requests to the cluster nodes. I chose Apache HTTP Server for this project.

Tomcat 9 can be downloaded from the official Tomcat website: https://tomcat.apache.org/download-90.cgi.

Once downloaded, we just need to unzip the Tomcat distribution into a directory named node01 and then copy that directory to another one named node02.

Next, we modify each node's server.xml so that the two nodes use different ports, since they run on the same host. This is done by changing the port of the Connector element and the shutdown port of the Server element (node01's values are shown here):

```xml
<Server port="8105" shutdown="SHUTDOWN">
.....
<Connector port="8180" protocol="HTTP/1.1"
           connectionTimeout="20000"
           redirectPort="8443" />
```
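Since node02 starts out as a plain copy of node01, these port changes can also be scripted. The sketch below is a hypothetical Python helper, not part of the original setup. It rewrites the Server shutdown port and the HTTP/1.1 Connector port in a server.xml string. The node01 values come from this post, and 8280 matches the node02 BalancerMember in the Apache configuration further down. The node02 shutdown port 8205 is an assumption.

```python
import xml.etree.ElementTree as ET

# Hypothetical port plan. node01 values are the ones used in this post;
# 8280 matches the node02 BalancerMember; 8205 is an assumed shutdown port.
NODE_PORTS = {
    "node01": {"shutdown": "8105", "http": "8180"},
    "node02": {"shutdown": "8205", "http": "8280"},
}


def set_node_ports(server_xml: str, node: str) -> str:
    """Return server_xml with the shutdown and HTTP ports set for `node`."""
    ports = NODE_PORTS[node]
    root = ET.fromstring(server_xml)  # the root element is <Server>
    root.set("port", ports["shutdown"])
    for connector in root.iter("Connector"):
        # A Connector without a protocol attribute defaults to HTTP/1.1;
        # any other connector (e.g. AJP) is left untouched.
        if connector.get("protocol", "HTTP/1.1") == "HTTP/1.1":
            connector.set("port", ports["http"])
    return ET.tostring(root, encoding="unicode")
```

For example, you could read node01/conf/server.xml, pass it through `set_node_ports(..., "node02")` and write the result to node02/conf/server.xml. Keep in mind that ElementTree drops XML comments by default, so the generated file loses the comments that ship with the stock server.xml. Editing by hand keeps them.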

Then we add the default cluster configuration below. It is essentially copied from the Tomcat documentation, but the receiver port still has to differ between the nodes because they run on the same machine (4001 on node01, 4002 on node02):

```xml
<Host name="localhost" appBase="webapps"
      unpackWARs="true" autoDeploy="true">

  <Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"
           channelSendOptions="8">

    <Manager className="org.apache.catalina.ha.session.DeltaManager"
             expireSessionsOnShutdown="false"
             notifyListenersOnReplication="true"/>

    <Channel className="org.apache.catalina.tribes.group.GroupChannel">
      <Membership className="org.apache.catalina.tribes.membership.McastService"
                  address="228.0.0.4"
                  port="45564"
                  frequency="500"
                  dropTime="3000"/>
      <Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"
                address="0.0.0.0"
                port="4001"
                autoBind="100"
                selectorTimeout="5000"
                maxThreads="6"/>
      <Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">
        <Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>
      </Sender>
      <Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>
      <Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatchInterceptor"/>
    </Channel>

    <Valve className="org.apache.catalina.ha.tcp.ReplicationValve"
           filter=""/>
    <Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

    <Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"
              tempDir="/tmp/war-temp/"
              deployDir="/tmp/war-deploy/"
              watchDir="/tmp/war-listen/"
              watchEnabled="false"/>

    <ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>
  </Cluster>
```

I placed the cluster configuration under the Host element rather than the Engine element so that the FarmWarDeployer does not cause a warning at Tomcat startup, since deployment is specific to a host, not to the whole engine.

You can find the whole server.xml file at this URL: https://github.com/sherif-abdelfattah/ExSession_old/blob/master/server.xml.
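With each node's full server.xml at hand, a quick sanity check is worthwhile. Both nodes should share the same multicast membership group, otherwise they will not discover each other, and they should use different receiver ports. The helper below is a hypothetical Python sketch, not a Tomcat tool. With `autoBind="100"` the Tribes receiver should also try the next ports if the configured one is taken, so a receiver port clash is more a configuration smell than a hard failure.

```python
import xml.etree.ElementTree as ET


def cluster_settings(server_xml: str) -> dict:
    """Extract the multicast group and receiver port from a server.xml string."""
    root = ET.fromstring(server_xml)
    membership = root.find(".//Cluster/Channel/Membership")
    receiver = root.find(".//Cluster/Channel/Receiver")
    if membership is None or receiver is None:
        raise ValueError("no <Cluster> with <Membership> and <Receiver> found")
    return {
        "mcast": (membership.get("address"), membership.get("port")),
        "receiver_port": receiver.get("port"),
    }


def check_node_pair(xml_a: str, xml_b: str) -> list:
    """Return a list of problems for two cluster nodes running on the same host."""
    a, b = cluster_settings(xml_a), cluster_settings(xml_b)
    problems = []
    if a["mcast"] != b["mcast"]:
        problems.append(f"multicast groups differ: {a['mcast']} vs {b['mcast']}")
    if a["receiver_port"] == b["receiver_port"]:
        problems.append(f"both receivers use port {a['receiver_port']}")
    return problems
```

An empty list means the two files agree on membership and keep their receivers apart.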

Note that I included the JvmRouteBinderValve in the configuration. It is needed to recover when session stickiness fails. If a request for an existing session lands on a node other than the one the session is bound to, the valve rewrites the JSESSIONID cookie and the session continues to be served from the node the request landed on.
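The rewrite the valve performs can be pictured in a few lines of Python. This is a simplified, hypothetical illustration of the idea, not Tomcat's implementation. It assumes each node's `<Engine>` has a `jvmRoute` (node01, node02), so that session IDs look like `<id>.node02`. It ignores the valve's other work, such as re-registering the session under its new ID and sending the updated cookie.

```python
def rebind_session_id(session_id: str, local_route: str) -> str:
    """Point a '<id>.<jvmRoute>' session ID at the node that received the request.

    Simplified illustration of the JvmRouteBinderValve idea, not Tomcat code.
    """
    base, dot, route = session_id.partition(".")
    if dot and route == local_route:
        return session_id  # already bound to this node: nothing to rewrite
    return f"{base}.{local_route}"
```

For example, `rebind_session_id("A1B2C3.node02", "node01")` gives `"A1B2C3.node01"`. Once the browser holds a cookie with that value, Apache's stickiness follows the new node.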

Once both nodes are configured, we start them up, and the following messages appear in Tomcat's catalina.out:

node01 output when it starts to join node02:

```
16-Aug-2020 19:37:34.751 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler ["http-nio-8180"]
16-Aug-2020 19:37:34.768 INFO [main] org.apache.catalina.startup.Catalina.load Server initialization in [431] milliseconds
16-Aug-2020 19:37:34.804 INFO [main] org.apache.catalina.core.StandardService.startInternal Starting service [Catalina]
16-Aug-2020 19:37:34.804 INFO [main] org.apache.catalina.core.StandardEngine.startInternal Starting Servlet engine: [Apache Tomcat/9.0.37]
16-Aug-2020 19:37:34.812 INFO [main] org.apache.catalina.ha.tcp.SimpleTcpCluster.startInternal Cluster is about to start
16-Aug-2020 19:37:34.818 INFO [main] org.apache.catalina.tribes.transport.ReceiverBase.bind Receiver Server Socket bound to:[/0.0.0.0:4001]
16-Aug-2020 19:37:34.829 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.setupSocket Setting cluster mcast soTimeout to [500]
16-Aug-2020 19:37:34.830 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Sleeping for [1000] milliseconds to establish cluster membership, start level:[4]
16-Aug-2020 19:37:35.221 INFO [Membership-MemberAdded.] org.apache.catalina.ha.tcp.SimpleTcpCluster.memberAdded Replication member added:[org.apache.catalina.tribes.membership.MemberImpl[tcp://{0, 0, 0, 0}:4002,{0, 0, 0, 0},4002, alive=3358655, securePort=-1, UDP Port=-1, id={-56 72 63 -39 -27 123 70 86 -109 22 97 122 50 -99 0 24 }, payload={}, command={}, domain={}]]
16-Aug-2020 19:37:35.832 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Done sleeping, membership established, start level:[4]
16-Aug-2020 19:37:35.836 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Sleeping for [1000] milliseconds to establish cluster membership, start level:[8]
16-Aug-2020 19:37:35.842 INFO [Tribes-Task-Receiver[localhost-Channel]-1] org.apache.catalina.tribes.io.BufferPool.getBufferPool Created a buffer pool with max size:[104857600] bytes of type: [org.apache.catalina.tribes.io.BufferPool15Impl]
16-Aug-2020 19:37:36.837 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Done sleeping, membership established, start level:[8]
16-Aug-2020 19:37:36.842 INFO [main] org.apache.catalina.ha.deploy.FarmWarDeployer.start Cluster FarmWarDeployer started.
16-Aug-2020 19:37:36.849 INFO [main] org.apache.catalina.ha.session.JvmRouteBinderValve.startInternal JvmRouteBinderValve started
16-Aug-2020 19:37:36.855 INFO [main] org.apache.catalina.startup.HostConfig.deployWAR Deploying web application archive [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession.war]
16-Aug-2020 19:37:37.000 INFO [main] org.apache.catalina.ha.session.DeltaManager.startInternal Register manager [/ExSession] to cluster element [Host] with name [localhost]
16-Aug-2020 19:37:37.000 INFO [main] org.apache.catalina.ha.session.DeltaManager.startInternal Starting clustering manager at [/ExSession]
16-Aug-2020 19:37:37.005 INFO [main] org.apache.catalina.ha.session.DeltaManager.getAllClusterSessions Manager [/ExSession], requesting session state from [org.apache.catalina.tribes.membership.MemberImpl[tcp://{0, 0, 0, 0}:4002,{0, 0, 0, 0},4002, alive=3360160, securePort=-1, UDP Port=-1, id={-56 72 63 -39 -27 123 70 86 -109 22 97 122 50 -99 0 24 }, payload={}, command={}, domain={}]]. This operation will timeout if no session state has been received within [60] seconds.
16-Aug-2020 19:37:37.121 INFO [main] org.apache.catalina.ha.session.DeltaManager.waitForSendAllSessions Manager [/ExSession]; session state sent at [8/16/20, 7:37 PM] received in [105] ms.
16-Aug-2020 19:37:37.160 INFO [main] org.apache.catalina.startup.HostConfig.deployWAR Deployment of web application archive [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession.war] has finished in [305] ms
16-Aug-2020 19:37:37.161 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ROOT]
16-Aug-2020 19:37:37.185 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ROOT] has finished in [24] ms
16-Aug-2020 19:37:37.185 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/docs]
16-Aug-2020 19:37:37.199 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/docs] has finished in [14] ms
16-Aug-2020 19:37:37.200 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/examples]
16-Aug-2020 19:37:37.393 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/examples] has finished in [193] ms
16-Aug-2020 19:37:37.393 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/host-manager]
16-Aug-2020 19:37:37.409 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/host-manager] has finished in [16] ms
16-Aug-2020 19:37:37.409 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/manager]
16-Aug-2020 19:37:37.422 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/manager] has finished in [13] ms
16-Aug-2020 19:37:37.422 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession_exploded]
16-Aug-2020 19:37:37.435 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession_exploded] has finished in [12] ms
16-Aug-2020 19:37:37.436 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["http-nio-8180"]
16-Aug-2020 19:37:37.445 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in [2676] milliseconds
```

These logs show that the cluster has started and that node01 has joined node02, which is listening on receiver port 4002.
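When more nodes are involved, it can be handy to pull these membership events out of catalina.out instead of reading them by eye. The following is a small Python sketch that assumes the "Replication member added" line format shown above, which may differ between Tomcat versions.

```python
import re

# Matches the line SimpleTcpCluster logs when a member joins, e.g.
# "... Replication member added:[...MemberImpl[tcp://{0, 0, 0, 0}:4002,..."
MEMBER_ADDED = re.compile(r"Replication member added:\[.*?tcp://\{([^}]*)\}:(\d+)")


def added_members(log_lines):
    """Return (address, receiver_port) for every member-added event in the log."""
    members = []
    for line in log_lines:
        match = MEMBER_ADDED.search(line)
        if match:
            # The address is printed as a list of Java bytes, which are signed,
            # so values above 127 appear negative; & 0xFF maps them to 0..255.
            octets = (str(int(part) & 0xFF) for part in match.group(1).split(","))
            members.append((".".join(octets), int(match.group(2))))
    return members
```

Fed the node01 log above (for example by iterating over an opened `logs/catalina.out`), it reports a single member, `("0.0.0.0", 4002)`. The address is 0.0.0.0 because the Receiver is bound to address 0.0.0.0.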

Next, we configure Apache as a load balancer. Since we have a running cluster with session replication, there are two options: set up session stickiness, or leave it out and let the Tomcat cluster handle sessions through session replication.

The Apache load balancer configuration for each case looks like this:

```apache
# Apache reverse proxy load balancer with session stickiness set to use JSESSIONID
# (route=node01/node02 must match the jvmRoute attribute of each Tomcat <Engine>)
<Proxy balancer://mycluster>
    BalancerMember http://127.0.0.1:8180 route=node01
    BalancerMember http://127.0.0.1:8280 route=node02
</Proxy>

ProxyPass /ExSession balancer://mycluster/ExSession stickysession=JSESSIONID|jsessionid
# stickysession is a ProxyPass parameter; ProxyPassReverse only rewrites response headers
ProxyPassReverse /ExSession balancer://mycluster/ExSession

# Apache reverse proxy load balancer with no session stickiness
#<Proxy "balancer://mycluster">
#    BalancerMember "http://127.0.0.1:8180"
#    BalancerMember "http://127.0.0.1:8280"
#</Proxy>
#
#ProxyPass "/ExSession" "balancer://mycluster/ExSession"
#ProxyPassReverse "/ExSession" "balancer://mycluster/ExSession"
```
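To make `stickysession=JSESSIONID|jsessionid` concrete: mod_proxy_balancer looks for the session ID in the JSESSIONID cookie or in a jsessionid URL parameter. It treats the part after the first dot as the route and matches that against the BalancerMember `route` values. The Python model below is a simplified sketch of that lookup, not Apache code. It only handles the `;jsessionid=` path-parameter form that Tomcat uses for URL rewriting. It assumes the Tomcat nodes use jvmRoute values node01 and node02. When the ID carries no route, the balancer falls back to its normal load-balancing method, represented here by `None`.

```python
from http.cookies import SimpleCookie
from urllib.parse import urlsplit

# Mirrors the BalancerMember lines above; assumes jvmRoute node01/node02 in Tomcat.
MEMBERS = {"node01": "http://127.0.0.1:8180", "node02": "http://127.0.0.1:8280"}


def session_route(cookie_header: str, url: str):
    """Return the route embedded in the session ID, or None if there is none."""
    session_id = None
    # This sketch checks a ';jsessionid=' path parameter first, then the cookie.
    path = urlsplit(url).path
    marker = ";jsessionid="
    if marker in path:
        session_id = path.split(marker, 1)[1].split(";", 1)[0]
    else:
        cookies = SimpleCookie()
        cookies.load(cookie_header or "")
        if "JSESSIONID" in cookies:
            session_id = cookies["JSESSIONID"].value
    if not session_id or "." not in session_id:
        return None
    # The route is everything after the first dot, e.g. "A1B2C3.node02" -> "node02".
    return session_id.split(".", 1)[1]


def sticky_member(cookie_header: str, url: str):
    """Return the BalancerMember URL the request sticks to, or None to load-balance."""
    return MEMBERS.get(session_route(cookie_header, url))
```

This also shows why the sticky configuration depends on the Tomcat side. Without a jvmRoute, the session ID has no dot, no route is found, and every request is load-balanced as if stickiness were off.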

I commented out the configuration without session stickiness and used the sticky one. I wanted to deploy an application, shut down the node my session is stuck to, and see whether the session had been replicated.

To test this, I wrote a small servlet based on the Tomcat servlet examples. It can be found at this URL: https://github.com/sherif-abdelfattah/ExSession_old/blob/master/ExSession.war.

One thing to note about the ExSession servlet application: the objects it stores in its sessions must implement the java.io.Serializable interface so that Tomcat's session managers can replicate them. The application must also be marked with the <distributable/> element in its web.xml file.

These are preconditions for any application that is to work with a Tomcat cluster.
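The `<distributable/>` half of that precondition is easy to check before deploying. Below is a hypothetical Python helper that inspects a web.xml document, or the WEB-INF/web.xml inside a WAR such as ExSession.war. It cannot verify the Serializable requirement, because that depends on what the code actually puts into the session.

```python
import xml.etree.ElementTree as ET
import zipfile


def is_distributable(web_xml) -> bool:
    """True if the <web-app> root element has a direct <distributable/> child."""
    root = ET.fromstring(web_xml)  # accepts str or bytes
    # web.xml normally declares a namespace (e.g. http://xmlns.jcp.org/xml/ns/javaee),
    # so compare local tag names instead of hard-coding one namespace URI.
    return any(child.tag.rsplit("}", 1)[-1] == "distributable" for child in root)


def war_is_distributable(war_path: str) -> bool:
    """Read WEB-INF/web.xml from a WAR (which is a zip file) and check it."""
    with zipfile.ZipFile(war_path) as war:
        return is_distributable(war.read("WEB-INF/web.xml"))
```

Running `war_is_distributable` against each node's `webapps/ExSession.war` before starting the cluster gives a quick yes/no on the web.xml requirement.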

Once Apache is up and running, it serves our servlet page directly from localhost on port 80:

Now take note of the JSESSIONID cookie value. We are being served by node02, the ID ends in CF07, and the session was created on Aug. 16th at 20:19:55.

Now let's stop Tomcat node02 and refresh the browser page:

Tomcat has failed our session over to node01. The session still has the same timestamp and the same ID, but it is now served from node01.

This confirms that session replication works as expected in this setup.
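The browser check above can also be scripted. The sketch below is hypothetical. The URL http://localhost/ExSession/ assumes the servlet answers at the application root behind the Apache ProxyPass. Stopping node02, for example with bin/shutdown.sh from node02's directory, is still done by hand between the two requests. A JvmRouteBinderValve rewrite may change a `.jvmRoute` suffix, so the comparison ignores everything after the first dot.

```python
import http.cookiejar
import urllib.request

APP_URL = "http://localhost/ExSession/"  # hypothetical: adjust to the servlet's real path


def make_client():
    """Return a cookie jar and an opener that keeps cookies between requests."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return jar, opener


def fetch_session_id(jar, opener, url=APP_URL):
    """Request the page and return the current JSESSIONID cookie value, if any."""
    with opener.open(url) as response:
        response.read()
    for cookie in jar:
        if cookie.name == "JSESSIONID":
            return cookie.value
    return None


def same_session(before: str, after: str) -> bool:
    """Compare session IDs while ignoring an optional '.jvmRoute' suffix."""
    return before.split(".", 1)[0] == after.split(".", 1)[0]
```

A run would follow four steps:

1. Call `fetch_session_id` once.
2. Stop node02.
3. Call `fetch_session_id` again with the same jar and opener.
4. Pass both values to `same_session`. `True` means the session survived the failover.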