Ops Daily

Published by Sherif Abdelfattah. A DevOps engineer; personal blog, to help solve day to day problems and help document and share solutions and hints for DevOps professionals

Sunday, 18 April 2021

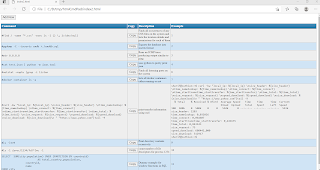

HTML5, CSS + Javascript to create a command memo pad

I looked for a tool that lets me record a command's text, a description, and ideally a usage example, and that lets me copy the command with a single click, but I couldn't find one.

So I decided to build one. I started with the HTML table styler from divtable.com (https://divtable.com/table-styler/) to build the table style, made some changes to it, and then used some HTML5 and JavaScript to implement the functionality I needed.

HTML5 standardized the contentEditable attribute, which let me build the whole thing without a backend.

Using just HTML5 and some JavaScript, the content of the HTML file can be edited and manipulated in the browser: you can modify any element that has the contentEditable attribute set to true.

Elements nested inside a top-level element with contentEditable set to true, say a table, are all editable too, unless you set the attribute to false on a child element.

To save those changes, we just need to save the HTML file using the browser's Save As entry in the File menu; this ensures all the changes are written to disk.

This allows very basic functionality to work offline without a backend.

This can of course be extended with backend code, but that would need a server-side scripting language to persist the information and render the HTML, and more time.

The code for the html page and its style is found in this link: https://github.com/sherif-abdelfattah/htmlcmdpad.

This is what htmlcmdpad looks like:

One major limitation for improving this idea as a front-end-only tool is that browser-based JavaScript has no direct access to the disk, for security reasons.
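
The contentEditable behaviour described above can be sketched with a small Python script that generates a standalone page. This is not the htmlcmdpad code from the repository, only a minimal illustration: the function name build_memo_page, the table layout and the copy handler are assumptions made for this sketch. The copy button relies on the browser's navigator.clipboard.writeText, which some browsers may restrict depending on how the page is opened.

```python
from html import escape

# Hypothetical page template: the table is editable as a whole,
# while the "Copy" column opts out with contenteditable="false".
PAGE = """<!DOCTYPE html>
<html>
<head><meta charset="utf-8"><title>Command memo pad</title></head>
<body>
<table contenteditable="true" border="1">
<tr><th>Command</th><th>Description</th><th contenteditable="false">Copy</th></tr>
{rows}
</table>
</body>
</html>
"""

ROW = (
    "<tr><td>{command}</td><td>{description}</td>"
    # The button cell is not editable; its handler copies the text of the
    # first cell in the same row, including any edits made in the browser.
    '<td contenteditable="false"><button onclick="'
    "navigator.clipboard.writeText(this.closest('tr').cells[0].innerText)"
    '">Copy</button></td></tr>'
)


def build_memo_page(entries):
    """Return a standalone HTML page for a list of (command, description) pairs."""
    rows = "\n".join(
        ROW.format(command=escape(cmd), description=escape(desc))
        for cmd, desc in entries
    )
    return PAGE.format(rows=rows)
```

Writing the result of build_memo_page([("rsync --progress src dst", "Copy with progress")]) to an .html file and opening it in a browser gives an editable table; edits persist only if the page is saved again from the browser.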

Saturday, 27 February 2021

How to show file/directory copy progress on Linux

The copy was progressing very slowly; it took more than 3 hours to finish. The problem is that the standard Linux cp command does not let you see the copy progress.

So I was curious how to copy files and still show some progress statistics. I found out there are multiple ways to do it, but most require installing additional packages on your system.

Let's take a look at the options.

Using gcp

gcp is an enhanced file copier that offers more options than cp. Among other things, it detects whether the files being copied already exist and prints a warning.

gcp prints the progress and the copy speed in MB/s, the size of the file copied, and the time taken to copy.

sherif@Luthien:~/Downloads$ gcp -f datafari.deb datafari.deb_

Copying 640.06 MiB 100% |################################################| 213.51 MB/s Time: 0:00:03

sherif@Luthien:~/Downloads$

Using progress

progress is a little utility that can monitor the progress and throughput of programs doing IO, like cp, or a web browser.

Like gcp, progress needs to be installed as it is not a part of the standard installation.

One catch with progress is that it uses an interactive terminal display to show the progress and throughput information. To keep a record of the progress while copying multiple files, we can use the tee command.

tee passes the standard output of progress through to the terminal and saves a copy in a file.

To view the file, we need to make the non-printable characters visible, using cat -e:

sherif@Luthien:~$ cp -r ./Downloads ./Download_ & progress -mp $! |tee test

[1] 1506

[1]+ Done cp -r ./Downloads ./Download_

sherif@Luthien:~$

sherif@Luthien:~$ cat -e test

^[[?1049h^[[22;0;0t^[[1;37r^[(B^[[m^[[4l^[[?7h^[[H^[[2JNo PID(s) currently monitored^M$

^[[H^[[2JNo PID(s) currently monitored^M$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/solr-8.3.0.zip^[[2;9H53.9% (96.2 MiB / 178.6 MiB) 79.4 MiB/s remaining 0:00:01^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/solr-8.3.0.zip^[[2;9H91.8% (164 MiB / 178.6 MiB) 73.5 MiB/s^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/solr-8.3.0.zip^[[2;9H91.8% (164 MiB / 178.6 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/enterprise-search-0.1.0-beta3.tar.gz^[[2;9H12.2% (20.2 MiB / 165.8 MiB) 53.3 MiB/s remaining 0:00:02^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/enterprise-search-0.1.0-beta3.tar.gz^[[2;9H74.0% (122.8 MiB / 165.8 MiB) 65.6 MiB/s^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/enterprise-search-0.1.0-beta3.tar.gz^[[2;9H74.0% (122.8 MiB / 165.8 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/datafari.deb^[[2;9H68.4% (437.5 MiB / 640.1 MiB) 103.8 MiB/s remaining 0:00:01^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/datafari.deb^[[2;9H68.4% (437.5 MiB / 640.1 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/keycloak-8.0.2.zip^[[2;9H57.5% (131.2 MiB / 228.4 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/wso2is-5.9.0.zip^[[2;9H50.9% (189 MiB / 371.2 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/OpenJDK8U-jdk_x64_linux_hotspot_8u232b09.tar.gz^[[2;9H67.5% (67.2 MiB / 99.7 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/OpenJDK11U-jdk_x64_linux_hotspot_11.0.5_10.tar.gz^[[2;9H99.2% (186.2 MiB / 187.8 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/Anaconda3-2020.07-Linux-x86_64.sh^[[2;9H15.9% (87.5 MiB / 550.1 MiB) 100.9 MiB/s remaining 0:00:04^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/Anaconda3-2020.07-Linux-x86_64.sh^[[2;9H31.2% (171.8 MiB / 550.1 MiB) 98.5 MiB/s remaining 0:00:03^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/Anaconda3-2020.07-Linux-x86_64.sh^[[2;9H41.1% (226.2 MiB / 550.1 MiB) 93.0 MiB/s remaining 0:00:03^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/Anaconda3-2020.07-Linux-x86_64.sh^[[2;9H48.0% (264.2 MiB / 550.1 MiB) 86.9 MiB/s remaining 0:00:03^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/Anaconda3-2020.07-Linux-x86_64.sh^[[2;9H85.2% (468.5 MiB / 550.1 MiB) 98.6 MiB/s^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/Anaconda3-2020.07-Linux-x86_64.sh^[[2;9H85.2% (468.8 MiB / 550.1 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/eclipse-installer/plugins/org.eclipse.justj.openjdk.hotspot.jre.minimal.stripped_14.0.2.v20200815-0932.jar^[[2;9H0.0% (0 / 18.9 KiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/eclipse-installer/configuration/org.eclipse.osgi/154/0/.cp/libswt-cairo-gtk-4936r26.so^[[2;9H0.0% (0 / 42.9 KiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/chrome-linux.zip^[[2;9H65.5% (73.8 MiB / 112.6 MiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/redash-setup/redash-master/client/app/assets/less/inc/growl.less^[[2;9H0.0% (0 / 476 B)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/redash-setup/redash-master/redash/query_runner/query_results.py^[[2;9H0.0% (0 / 5.3 KiB)^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/fess-13.4.2.zip^[[2;9H40.9% (58 MiB / 141.8 MiB) 94.5 MiB/s^M$

$

^[[H^[[2J[ 1506] cp /home/sherif/Downloads/fess-13.4.2.zip^[[2;9H40.9% (58 MiB / 141.8 MiB)^M$

$

^[[H^[[2JNo such pid: 1506, or wrong permissions.^M$

^[[37;1H^[[?1049l^[[23;0;0t^M^[[?1l^[>sherif@Luthien:~$
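
The captured file is hard to read because of the terminal control sequences. As an alternative to cat -e, a small Python helper can strip them and keep only the readable progress lines. This is a sketch, not part of progress itself: the function name clean_progress_log and the regular expression are assumptions that cover the kinds of sequences visible in the capture above, not every possible terminal escape.

```python
import re

# Escape sequences of the kinds seen in the capture above (an assumption,
# not an exhaustive terminal parser).
ANSI_ESCAPE = re.compile(
    r"\x1b\[[0-9;?]*[ -/]*[@-~]"   # CSI sequences: cursor moves, clear screen, modes
    r"|\x1b[()][0-9A-Za-z]"        # character-set selection, e.g. ESC ( B
    r"|\x1b[=>]"                   # keypad mode switches
)


def clean_progress_log(raw):
    """Return the readable lines of a captured progress session."""
    # Replace each escape sequence with a space so that the file name and
    # the percentage, which were separated by a cursor move, stay apart.
    text = ANSI_ESCAPE.sub(" ", raw)
    lines = (" ".join(line.split()) for line in text.splitlines())
    return [line for line in lines if line]
```

For the file saved by tee above, something like clean_progress_log(open("test", errors="replace").read()) should return one entry per screen refresh.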

Using pv

pv is a filter tool similar to cat, that monitors the progress of data through a pipe.

pv can be handy to print progress and rate information for any data transfer that goes through a pipe, e.g. when the data is being sent using netcat or the like.

Like the above tools, pv needs to be installed (as root), as it is not part of the standard installation.

sherif@Luthien:~/Downloads$ pv datafari.deb >datafari.deb_

640MiB 0:00:00 [ 946MiB/s] [=======================================================>] 100%

sherif@Luthien:~/Downloads$
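
The idea behind pv, counting the bytes as they pass through, can be sketched in a few lines of Python when nothing can be installed but a Python interpreter is available. This is a simplified illustration and not how pv is implemented; the names copy_with_progress and print_progress and the 1 MiB default chunk size are assumptions made for this sketch.

```python
import os


def copy_with_progress(src, dst, report, chunk_size=1024 * 1024):
    """Copy src to dst in chunks, calling report(done, total) after each chunk."""
    total = os.path.getsize(src)
    done = 0
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            chunk = fin.read(chunk_size)
            if not chunk:
                break
            fout.write(chunk)
            done += len(chunk)
            report(done, total)
    return done


def print_progress(done, total):
    """Hypothetical reporter: rewrite a single status line on the terminal."""
    percent = 100.0 * done / total if total else 100.0
    print(
        f"\r{done / 2**20:8.1f} MiB of {total / 2**20:.1f} MiB ({percent:5.1f}%)",
        end="",
        flush=True,
    )
```

A call such as copy_with_progress("datafari.deb", "datafari.deb_", print_progress) would then show a running percentage. Unlike cp or rsync, this sketch copies file contents only and does not preserve permissions or timestamps.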

Using rsync

rsync is the Swiss Army knife when it comes to copying files between two hosts.

It can also do local copies, and it offers a huge number of options, especially for backups, some of which can speed up the process.

rsync is installed by default on most Linux systems, so it is probably the easiest way to get progress information when tools like progress can't be installed on the system.

I would recommend reading through the rsync options and keeping them in mind when doing large file copies or backups.

sherif@Luthien:~/Downloads$ rsync --progress datafari.deb datafari.deb_

datafari.deb

671,153,350 100% 113.72MB/s 0:00:05 (xfr#1, to-chk=0/1)

sherif@Luthien:~/Downloads$ man rsync

sherif@Luthien:~/Downloads$ rsync --progress -v datafari.deb datafari.deb_

datafari.deb

671,153,350 100% 166.59MB/s 0:00:03 (xfr#1, to-chk=0/1)

sent 671,317,285 bytes received 35 bytes 149,181,626.67 bytes/sec

total size is 671,153,350 speedup is 1.00

sherif@Luthien:~/Downloads$ rsync --progress -vv datafari.deb datafari.deb_

delta-transmission disabled for local transfer or --whole-file

datafari.deb

671,153,350 100% 255.09MB/s 0:00:02 (xfr#1, to-chk=0/1)

total: matches=0 hash_hits=0 false_alarms=0 data=671153350

sent 671,317,285 bytes received 102 bytes 268,526,954.80 bytes/sec

total size is 671,153,350 speedup is 1.00

sherif@Luthien:~/Downloads$

Sunday, 13 September 2020

Using Docker registry

An application build process can include a step for creating and building a Docker image for the target app.

Docker images then need to be pushed to a Docker registry so that the deployment step can use the image to deploy the application.

For the pipeline to work, one needs a Docker registry to host the images, either local or public.

Docker Hub offers a cloud-hosted registry, which is very flexible and always available, but requires your systems to have internet access.

I tested both using the Docker Hub registry and building a simple local registry.

Running a local Docker registry is simple; it's just another Docker container.

sherif@Luthien:~$ cat docker_registry.sh

docker run -d \
  -p 5100:5000 \
  --restart=always \
  --name luthien_registry \
  -v /registry:/var/lib/registry \
  registry:2

In the above script, I am mapping the default registry port 5000 on the container to port 5100 on the host machine.

To verify if the registry is running, we use the docker ps or docker container ps command to check:

sherif@Luthien:~$ docker container ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

26009ca9e4f4 registry:2 "/entrypoint.sh /etc…" About a minute ago Up About a minute 0.0.0.0:5100->5000/tcp luthien_registry

sherif@Luthien:~$

Next we need to build a test image to test the new registry. To do this we use a Dockerfile:

sherif@Luthien:~$ cat Dockerfile

FROM busybox

CMD echo "Hello world! This is my first Docker image."

sherif@Luthien:~$

Then we use docker build to create the image locally:

sherif@Luthien:~$ docker build -f ./Dockerfile -t busybox_hello:1.0 ./

Sending build context to Docker daemon 3.432GB

Step 1/2 : FROM busybox

---> 6858809bf669

Step 2/2 : CMD echo "Hello world! This is my first Docker image."

---> Running in 3a7a187ab5ba

Removing intermediate container 3a7a187ab5ba

---> 63213a968c8e

Successfully built 63213a968c8e

Successfully tagged busybox_hello:1.0

sherif@Luthien:~$ docker image ls -a

REPOSITORY TAG IMAGE ID CREATED SIZE

busybox_hello 1.0 7415dea3e476 22 seconds ago 1.23MB

busybox latest 6858809bf669 3 days ago 1.23MB

sherif@Luthien:~$

Then we tag the local image using the registry_host:port/repository:tag form of the name under which it will be pushed to the registry.

sherif@Luthien:~$ docker tag busybox_hello:1.0 luthien:5100/busybox_hello:1.0

sherif@Luthien:~$ docker image ls -a

REPOSITORY TAG IMAGE ID CREATED SIZE

busybox_hello 1.0 7415dea3e476 23 minutes ago 1.23MB

luthien:5100/busybox_hello 1.0 7415dea3e476 23 minutes ago 1.23MB

busybox latest 6858809bf669 3 days ago 1.23MB

sherif@Luthien:~$

Then we push the locally tagged image to the registry using docker push:

sherif@Luthien:~$ docker push luthien:5100/busybox_hello:1.0

The push refers to repository [luthien:5100/busybox_hello]

be8b8b42328a: Pushed

1.0: digest: sha256:d7c348330e06aa13c1dc766590aebe0d75e95291993dd26710b6bbdd671b30d1 size: 527

sherif@Luthien:~$

To confirm that the image was pushed, we use the Docker registry REST API to query for our new image:

sherif@Luthien:~$ curl -LX GET http://luthien:5100/v2/_catalog

{"repositories":["busybox_hello"]}

sherif@Luthien:~$ curl -LX GET http://luthien:5100/v2/busybox_hello/tags/list

{"name":"busybox_hello","tags":["1.0"]}

sherif@Luthien:~$
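
The same two REST calls can be scripted, for example as a check at the end of a build pipeline. The following is a minimal Python sketch using only the standard library; the function names http_get_json and list_images are made up for this example, and it assumes a small, unauthenticated, plain-HTTP registry like the one above (the catalog endpoint is paginated, which this sketch ignores).

```python
import json
from urllib.request import urlopen


def http_get_json(url):
    """Fetch a URL and decode the JSON response body."""
    with urlopen(url, timeout=10) as resp:
        return json.load(resp)


def list_images(base_url, get_json=http_get_json):
    """Return {repository: [tags]} using the registry v2 endpoints shown above."""
    catalog = get_json(f"{base_url}/v2/_catalog")
    images = {}
    for repo in catalog.get("repositories", []):
        tags = get_json(f"{base_url}/v2/{repo}/tags/list")
        # "tags" can be null for a repository without tags.
        images[repo] = tags.get("tags") or []
    return images
```

Against the registry above, list_images("http://luthien:5100") should return a dictionary with busybox_hello mapped to its 1.0 tag. The get_json parameter exists only so the function can be exercised without a running registry.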

If we want to push to an existing Docker Hub repository, we follow a simpler process.

We just need to build the image with the repository tag, do a docker login, and then do a docker push.

This should push the image as a version tag into the existing repository.

sherif@Luthien:~$ docker build -t sfattah/sfattah_r:1.0 ./

sherif@Luthien:~$ docker login

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username: sfattah

Password:

Login Succeeded

sherif@Luthien:~$ docker push sfattah/sfattah_r:1.0

The push refers to repository [docker.io/sfattah/sfattah_r]

be8b8b42328a: Mounted from library/busybox

1.0: digest: sha256:d7c348330e06aa13c1dc766590aebe0d75e95291993dd26710b6bbdd671b30d1 size: 527

sherif@Luthien:~$

Sunday, 23 August 2020

Linux LVS with docker containers

LVS (Linux Virtual Server) is a mechanism implemented by the Linux kernel to do layer-4 (transport layer) switching, mainly to distribute load and provide high availability.

LVS is managed using a command-line tool: ipvsadm

I used Docker containers running Apache 2.4 to test the concept on a VirtualBox VM.

First, I needed to install Docker and create Docker volumes to store the Apache configuration and the content outside the containers:

root@fingolfin:~# docker volume create httpdcfg1

httpdcfg1

root@fingolfin:~# docker volume create httpdcfg2

httpdcfg2

root@fingolfin:~# docker volume create www2

www2

root@fingolfin:~# docker volume create www1

www1

root@fingolfin:~#

Once the volumes are created, we can start the containers:

root@fingolfin:~# docker run -d --name httpd1 -p 8180:80 -v www1:/usr/local/apache2/htdocs/ -v httpdcfg1:/usr/local/apache2/conf/ httpd:2.4

a6e11431a228498b8fc412dfcee6b0fc682ce241e79527fdf33e7ceb1945e54a

root@fingolfin:~#

root@fingolfin:~# docker run -d --name httpd2 -p 8280:80 -v www2:/usr/local/apache2/htdocs/ -v httpdcfg2:/usr/local/apache2/conf/ httpd:2.4

b40e29e187b0841d81b345ca975cd867bcce587be8b3f79e43a2ec0d1087aba8

root@fingolfin:~#

root@fingolfin:~# docker container ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a665073770ec httpd:2.4 "httpd-foreground" 41 minutes ago Up 41 minutes 0.0.0.0:8280->80/tcp httpd2

d1dc596f68a6 httpd:2.4 "httpd-foreground" 54 minutes ago Up 45 minutes 0.0.0.0:8180->80/tcp httpd1

root@fingolfin:~#

Then we need to change the index.html on the volumes www1 and www2 to show different nodes.

This can be done by directly accessing the file from the docker volume mount point:

root@fingolfin:~# docker volume inspect www1

[

{

"CreatedAt": "2020-08-23T16:59:50+02:00",

"Driver": "local",

"Labels": {},

"Mountpoint": "/var/lib/docker/volumes/www1/_data",

"Name": "www1",

"Options": {},

"Scope": "local"

}

]

root@fingolfin:~# cd /var/lib/docker/volumes/www1/_data/

The next step is to obtain each container's IP address using docker container inspect:

root@fingolfin:~# docker container inspect httpd1|grep '"IPAddress":'

"IPAddress": "172.17.0.2",

"IPAddress": "172.17.0.2",

root@fingolfin:~#

root@fingolfin:~# docker container inspect httpd2|grep '"IPAddress":'

"IPAddress": "172.17.0.3",

"IPAddress": "172.17.0.3",

root@fingolfin:~#

One more thing: we need to install ping inside the containers, so that we can troubleshoot the network in case things don't work.

To do this we use docker exec:

root@fingolfin:~# docker exec -it httpd1 bash

root@a6e11431a228:/usr/local/apache2#

root@a6e11431a228:/usr/local/apache2# apt update; apt install iputils-ping -y

Next we create a subinterface IP address, or as people call it a VIP (virtual IP), on the main Ethernet device of the Docker host. I use an address on the 10.0.2.0 network, but any other IP address should work equally well.

root@fingolfin:~# ifconfig enp0s3:1 10.0.2.200 netmask 255.255.255.0 broadcast 10.0.2.255

root@fingolfin:~# ip addr show dev enp0s3

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:7e:6e:97 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global dynamic noprefixroute enp0s3

valid_lft 74725sec preferred_lft 74725sec

inet 172.17.60.200/16 brd 172.17.255.255 scope global enp0s3:0

valid_lft forever preferred_lft forever

inet 10.0.2.200/24 brd 10.0.2.255 scope global secondary enp0s3:1

valid_lft forever preferred_lft forever

inet6 fe80::a790:c580:9be5:55ef/64 scope link noprefixroute

valid_lft forever preferred_lft forever

root@fingolfin:~#

We can then ping the host's IP, 10.0.2.15, from within the Docker container to make sure the container can reach it:

root@a6e11431a228:/usr/local/apache2# ping 10.0.2.15

PING 10.0.2.15 (10.0.2.15) 56(84) bytes of data.

64 bytes from 10.0.2.15: icmp_seq=1 ttl=64 time=0.114 ms

64 bytes from 10.0.2.15: icmp_seq=2 ttl=64 time=0.127 ms

^C

--- 10.0.2.15 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 17ms

rtt min/avg/max/mdev = 0.114/0.120/0.127/0.012 ms

root@a6e11431a228:/usr/local/apache2#

Then set up the Linux LVS from the host prompt:

root@fingolfin:~# ipvsadm -A -t 10.0.2.200:80 -s rr

root@fingolfin:~# ipvsadm -a -t 10.0.2.200:80 -r 172.17.0.3:80 -m

root@fingolfin:~# ipvsadm -a -t 10.0.2.200:80 -r 172.17.0.2:80 -m

root@fingolfin:~# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.0.2.200:80 rr

-> 172.17.0.2:80 Masq 1 0 0

-> 172.17.0.3:80 Masq 1 0 0

root@fingolfin:~#

Then to test the setup, we use curl from the host command line:

root@fingolfin:~# curl http://10.0.2.200

It works! node 1

root@fingolfin:~# curl http://10.0.2.200

It works! node 2

root@fingolfin:~# curl http://10.0.2.200

It works! node 1

root@fingolfin:~# curl http://10.0.2.200

It works! node 2

root@fingolfin:~#

root@fingolfin:~# ipvsadm -L -n --stats

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Conns InPkts OutPkts InBytes OutBytes

-> RemoteAddress:Port

TCP 10.0.2.200:80 4 24 16 1576 1972

-> 172.17.0.2:80 2 12 8 788 986

-> 172.17.0.3:80 2 12 8 788 986

root@fingolfin:~#

As can be seen, the requests are equally distributed between the 2 Apache containers.
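
The even split follows directly from the rr (round-robin) scheduler: each new connection goes to the next real server in turn. The small Python simulation below illustrates the idea; it is only a model, not how IPVS is implemented in the kernel, and the weighted variant is a deliberate simplification of the wrr scheduler (the real one interleaves servers more smoothly). The function names are made up for this sketch.

```python
from collections import Counter
from itertools import cycle


def round_robin(servers, requests):
    """Assign each request to the next server in turn, like the rr scheduler."""
    ring = cycle(servers)
    return [next(ring) for _ in range(requests)]


def weighted_round_robin(weights, requests):
    """Simplified wrr model: a server with weight w gets w turns per cycle."""
    ring = cycle([server for server, w in weights.items() for _ in range(w)])
    return [next(ring) for _ in range(requests)]


# Four connections over the two containers, as in the curl test above.
print(Counter(round_robin(["172.17.0.2", "172.17.0.3"], 4)))
```

With four requests each server receives two, matching the Conns column in the ipvsadm --stats output.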

Other load-balancing algorithms can be selected to suit the use case using the -s (scheduler) option.

More information can be found in the Linux ipvsadm man page: https://linux.die.net/man/8/ipvsadm

References:

http://www.ultramonkey.org/papers/lvs_tutorial/html/

Sunday, 16 August 2020

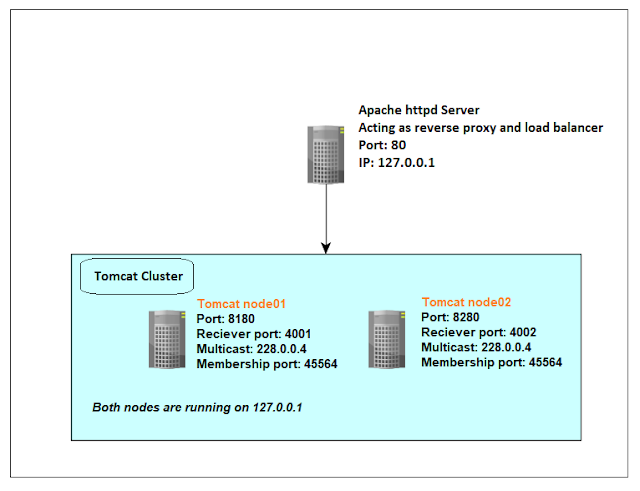

Tomcat Session Replication Clustering + Apache Load Balancer

I wanted to start putting in some test configurations for a future project based on Tomcat 9.

I needed to set up a test environment that runs on one VM, just to test my Apache and Tomcat clustering configuration. The test setup looks like this:

The setup has two Tomcat 9 nodes with clustering enabled, which should allow sessions to be replicated between the two nodes; session persistence is not configured yet.

To use the cluster effectively, we also need a load balancer to proxy requests to the cluster nodes; I have chosen Apache for this project.

Tomcat 9 can be downloaded from the official Tomcat website: https://tomcat.apache.org/download-90.cgi.

Once downloaded, we just need to unzip the Tomcat installation into a directory named node01 and copy that to another directory named node02.

We then need to modify the Tomcat server.xml so that the two nodes use different ports, since they run on the same machine. This is done by changing the port on the Connector element and the shutdown port on the Server element:

<Server port="8105" shutdown="SHUTDOWN">

.....

<Connector port="8180" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />
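
Since node02 is a copy of node01, the port changes can also be scripted rather than edited by hand. The Python sketch below shows one way to do it with the standard library XML parser. It is an illustration under assumptions: the function name set_node_ports is made up, only Connector elements whose protocol starts with "HTTP" are touched, and the parser drops XML comments, so the output is not a byte-for-byte copy of the original file.

```python
import xml.etree.ElementTree as ET


def set_node_ports(server_xml, shutdown_port, http_port, receiver_port=None):
    """Return server.xml text with the per-node ports replaced."""
    root = ET.fromstring(server_xml)       # root is the <Server> element
    root.set("port", str(shutdown_port))   # shutdown port
    for conn in root.iter("Connector"):
        # Assumption: leave AJP or other non-HTTP connectors untouched.
        if conn.get("protocol", "HTTP/1.1").startswith("HTTP"):
            conn.set("port", str(http_port))
    if receiver_port is not None:
        for recv in root.iter("Receiver"):  # cluster receiver, if configured
            recv.set("port", str(receiver_port))
    return ET.tostring(root, encoding="unicode")
```

For node02 this could be called as set_node_ports(text, 8205, 8280, 4002), where 8280 and 4002 are the node02 ports used later in this post and 8205 is only an assumed shutdown port.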

Then we need to add the default cluster configuration below. It is essentially copied from the Tomcat documentation, though we still need to change the Receiver port since both nodes run on the same machine:

<Host name="localhost" appBase="webapps"

unpackWARs="true" autoDeploy="true">

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.0.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="0.0.0.0"

port="4001"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatchInterceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

In this case I placed the cluster configuration under the Host element so that the deployer doesn't cause a warning at Tomcat startup, as deployment is specific to a host, not to the whole engine.

You can find the whole server.xml file at this URL: https://github.com/sherif-abdelfattah/ExSession_old/blob/master/server.xml.

Note that I included the JvmRouteBinderValve in the configuration. It is needed to recover when the node a session is sticky to fails: the cluster just rewrites the JSESSIONID cookie and continues to serve the same session from the node that the request landed on.

Once we configure the two nodes, we start them up and can see the messages below in the Tomcat catalina.out:

node01 output when it starts to join node02:

16-Aug-2020 19:37:34.751 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler ["http-nio-8180"]

16-Aug-2020 19:37:34.768 INFO [main] org.apache.catalina.startup.Catalina.load Server initialization in [431] milliseconds

16-Aug-2020 19:37:34.804 INFO [main] org.apache.catalina.core.StandardService.startInternal Starting service [Catalina]

16-Aug-2020 19:37:34.804 INFO [main] org.apache.catalina.core.StandardEngine.startInternal Starting Servlet engine: [Apache Tomcat/9.0.37]

16-Aug-2020 19:37:34.812 INFO [main] org.apache.catalina.ha.tcp.SimpleTcpCluster.startInternal Cluster is about to start

16-Aug-2020 19:37:34.818 INFO [main] org.apache.catalina.tribes.transport.ReceiverBase.bind Receiver Server Socket bound to:[/0.0.0.0:4001]

16-Aug-2020 19:37:34.829 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.setupSocket Setting cluster mcast soTimeout to [500]

16-Aug-2020 19:37:34.830 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Sleeping for [1000] milliseconds to establish cluster membership, start level:[4]

16-Aug-2020 19:37:35.221 INFO [Membership-MemberAdded.] org.apache.catalina.ha.tcp.SimpleTcpCluster.memberAdded Replication member added:[org.apache.catalina.tribes.membership.MemberImpl[tcp://{0, 0, 0, 0}:4002,{0, 0, 0, 0},4002, alive=3358655, securePort=-1, UDP Port=-1, id={-56 72 63 -39 -27 123 70 86 -109 22 97 122 50 -99 0 24 }, payload={}, command={}, domain={}]]

16-Aug-2020 19:37:35.832 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Done sleeping, membership established, start level:[4]

16-Aug-2020 19:37:35.836 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Sleeping for [1000] milliseconds to establish cluster membership, start level:[8]

16-Aug-2020 19:37:35.842 INFO [Tribes-Task-Receiver[localhost-Channel]-1] org.apache.catalina.tribes.io.BufferPool.getBufferPool Created a buffer pool with max size:[104857600] bytes of type: [org.apache.catalina.tribes.io.BufferPool15Impl]

16-Aug-2020 19:37:36.837 INFO [main] org.apache.catalina.tribes.membership.McastServiceImpl.waitForMembers Done sleeping, membership established, start level:[8]

16-Aug-2020 19:37:36.842 INFO [main] org.apache.catalina.ha.deploy.FarmWarDeployer.start Cluster FarmWarDeployer started.

16-Aug-2020 19:37:36.849 INFO [main] org.apache.catalina.ha.session.JvmRouteBinderValve.startInternal JvmRouteBinderValve started

16-Aug-2020 19:37:36.855 INFO [main] org.apache.catalina.startup.HostConfig.deployWAR Deploying web application archive [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession.war]

16-Aug-2020 19:37:37.000 INFO [main] org.apache.catalina.ha.session.DeltaManager.startInternal Register manager [/ExSession] to cluster element [Host] with name [localhost]

16-Aug-2020 19:37:37.000 INFO [main] org.apache.catalina.ha.session.DeltaManager.startInternal Starting clustering manager at [/ExSession]

16-Aug-2020 19:37:37.005 INFO [main] org.apache.catalina.ha.session.DeltaManager.getAllClusterSessions Manager [/ExSession], requesting session state from [org.apache.catalina.tribes.membership.MemberImpl[tcp://{0, 0, 0, 0}:4002,{0, 0, 0, 0},4002, alive=3360160, securePort=-1, UDP Port=-1, id={-56 72 63 -39 -27 123 70 86 -109 22 97 122 50 -99 0 24 }, payload={}, command={}, domain={}]]. This operation will timeout if no session state has been received within [60] seconds.

16-Aug-2020 19:37:37.121 INFO [main] org.apache.catalina.ha.session.DeltaManager.waitForSendAllSessions Manager [/ExSession]; session state sent at [8/16/20, 7:37 PM] received in [105] ms.

16-Aug-2020 19:37:37.160 INFO [main] org.apache.catalina.startup.HostConfig.deployWAR Deployment of web application archive [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession.war] has finished in [305] ms

16-Aug-2020 19:37:37.161 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ROOT]

16-Aug-2020 19:37:37.185 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ROOT] has finished in [24] ms

16-Aug-2020 19:37:37.185 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/docs]

16-Aug-2020 19:37:37.199 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/docs] has finished in [14] ms

16-Aug-2020 19:37:37.200 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/examples]

16-Aug-2020 19:37:37.393 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/examples] has finished in [193] ms

16-Aug-2020 19:37:37.393 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/host-manager]

16-Aug-2020 19:37:37.409 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/host-manager] has finished in [16] ms

16-Aug-2020 19:37:37.409 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/manager]

16-Aug-2020 19:37:37.422 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/manager] has finished in [13] ms

16-Aug-2020 19:37:37.422 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession_exploded]

16-Aug-2020 19:37:37.435 INFO [main] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/opt/tomcat_cluster/apache-tomcat-9.0.37_node1/webapps/ExSession_exploded] has finished in [12] ms

16-Aug-2020 19:37:37.436 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["http-nio-8180"]

16-Aug-2020 19:37:37.445 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in [2676] milliseconds

These logs show that the cluster has started and that node01 joined node02, which is listening on receiver port 4002.

Next we need to configure Apache as a load balancer. Since we have a running cluster with session replication, we have two options: either set up session stickiness, or leave it out and let the Tomcat cluster handle sessions through session replication.

The Apache load balancer configuration for the two cases looks like this:

# Apache Reverse proxy loadbalancer with session stickiness set to use JSESSIONID

<Proxy balancer://mycluster>

BalancerMember http://127.0.0.1:8180 route=node01

BalancerMember http://127.0.0.1:8280 route=node02

</Proxy>

ProxyPass /ExSession balancer://mycluster/ExSession stickysession=JSESSIONID|jsessionid

ProxyPassReverse /ExSession balancer://mycluster/ExSession stickysession=JSESSIONID|jsessionid

# Apache Reverse proxy loadbalancer with no session stickiness

#<Proxy "balancer://mycluster">

# BalancerMember "http://127.0.0.1:8180"

# BalancerMember "http://127.0.0.1:8280"

#</Proxy>

#ProxyPass "/ExSession" "balancer://mycluster/ExSession"

#ProxyPassReverse "/ExSession" "balancer://mycluster/ExSession"

I commented out the config without session stickiness and used the one with session stickiness, to test what happens when I deploy an application and shut down the node my session is sticky to, and to see whether my session is replicated.

For testing this, I wrote a small servlet based on the Tomcat servlet examples; it can be found at this URL: https://github.com/sherif-abdelfattah/ExSession_old/blob/master/ExSession.war.

One thing about the ExSession servlet application: the objects it stores in its sessions must implement the Java Serializable interface so that the Tomcat session managers can handle them, and the application must be marked with the <distributable/> tag in its web.xml file.

Those are preconditions for an application to work with a Tomcat cluster.

Once Apache is up and running, we can see that it displays our servlet page directly from localhost on port 80:

Take note of our JSESSIONID cookie value: we are being served from node02, the ID ends in CF07, and the session was created on Aug. 16th at 20:19:55.

Now let's stop Tomcat node02 and refresh the browser page:

It can be seen that Tomcat failed our session over to node01: the session still has the same time stamp and the same ID, but it is served from node01.

This proves that session replication works as expected.
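
The routing decision behind this failover can be modelled in a few lines of Python. This is a simplified sketch, not Apache's or Tomcat's actual code. It assumes that each Tomcat node has a jvmRoute matching the route name in the balancer configuration and appends it to the session ID after a dot, and that the balancer falls back to any live member when the sticky one is down. The function names and the sample session ID are made up.

```python
def route_of(session_id):
    """Return the route suffix of a session ID such as 'ABCDCF07.node02', or None."""
    _, sep, route = session_id.rpartition(".")
    return route if sep else None


def pick_member(session_id, members, alive):
    """Pick a balancer member; members maps route -> URL, alive is a set of live routes."""
    route = route_of(session_id) if session_id else None
    if route in members and route in alive:
        return route  # sticky target is up: keep using it
    # Sticky target missing or down: fall back to any live member
    # (a real balancer would apply its load-balancing method here).
    for candidate in members:
        if candidate in alive:
            return candidate
    return None


members = {"node01": "http://127.0.0.1:8180", "node02": "http://127.0.0.1:8280"}
print(pick_member("ABCDCF07.node02", members, alive={"node01"}))  # node02 is stopped
```

With node02 stopped, the request lands on node01, which already holds a replicated copy of the session, so the user sees the same session data.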

Wednesday, 22 July 2020

Microsoft SQL Server cheat sheet

I don't run into it every day, so I created this cheat sheet to help me remember how things are done the SQL Server way.

-- List all principals defined in a database

use jira881;

go

select * from sys.database_principals

-- List all the grants given to certain principal

use jira850;

go

SELECT pr.principal_id, pr.name as principal_name, pr.type_desc,

pr.authentication_type_desc, pe.state_desc, pe.permission_name

FROM sys.database_principals AS pr

JOIN sys.database_permissions AS pe

ON pe.grantee_principal_id = pr.principal_id;

-- List all users who own a database on the server

select suser_sname(owner_sid) as 'Owner', state_desc, *

from master.sys.databases

-- List all users defined server wide

select * from master.sys.server_principals

-- List all Server wide logins available and the login type:

select sp.name as login,

sp.type_desc as login_type,

sl.password_hash,

sp.create_date,

sp.modify_date,

case when sp.is_disabled = 1 then 'Disabled'

else 'Enabled' end as status

from sys.server_principals sp

left join sys.sql_logins sl

on sp.principal_id = sl.principal_id

where sp.type not in ('G', 'R')

order by sp.name;

-- list users in db_owner role for a certain database:

USE MyOptionsTest;

GO

SELECT members.name as 'members_name', roles.name as 'roles_name',roles.type_desc as 'roles_desc',members.type_desc as 'members_desc'

FROM sys.database_role_members rolemem

INNER JOIN sys.database_principals roles

ON rolemem.role_principal_id = roles.principal_id

INNER JOIN sys.database_principals members

ON rolemem.member_principal_id = members.principal_id

where roles.name = 'db_owner'

ORDER BY members.name

-- List SQL server dbrole vs database user mapping

USE MyOptionsTest;

GO

SELECT DP1.name AS DatabaseRoleName,

isnull (DP2.name, 'No members') AS DatabaseUserName

FROM sys.database_role_members AS DRM

RIGHT OUTER JOIN sys.database_principals AS DP1

ON DRM.role_principal_id = DP1.principal_id

LEFT OUTER JOIN sys.database_principals AS DP2

ON DRM.member_principal_id = DP2.principal_id

WHERE DP1.type = 'R'

ORDER BY DP1.name;

-- Create a server wide login

CREATE login koki

WITH password = 'Koki_123_123'

go

-- create a user mapped to a server wide login:

USE MyOptionsTest;

GO

CREATE USER koki_mot FOR LOGIN koki

go

-- add the mapped user to the db_owner role:

USE MyOptionsTest;

GO

EXEC sp_addrolemember 'db_owner', 'koki_mot';

go

USE jira881;

GO

CREATE USER koki_881 FOR LOGIN koki;

go

EXEC sp_addrolemember 'db_owner', 'koki_881';

go

-- create databases with an explicit collation:

create database testdb1

collate Latin1_General_CI_AS

go

use master;

go

create database testdb_bin

collate SQL_Latin1_General_CP850_BIN2

go

-- list all the binary collations available on the server:

SELECT Name, Description FROM fn_helpcollations() WHERE Name like '%bin2%'

-- back up a database to a file on disk

BACKUP DATABASE MyOptionsTest

TO DISK = '/tmp/MyOptionsTest.bak'

WITH FORMAT;

GO

-- restore the backup to a different db

RESTORE DATABASE MOTest

FROM DISK = '/tmp/MyOptionsTest.bak'

WITH

MOVE 'MyOptionsTest' TO '/var/opt/mssql/data/MOTest.mdf',

MOVE 'MyOptionsTest_log' TO '/var/opt/mssql/data/MOTest_log.ldf'

use MyOptionsTest

go

delete from dbo.t1

select * from dbo.t1

-- restore backup to same database

use master;

go

RESTORE DATABASE MyOptionsTest

FROM DISK = '/tmp/MyOptionsTest.bak'

WITH REPLACE

GO

mssql-scripter -S localhost -d jira850 -U koki

mssql-scripter -S localhost -d jira881 -U koki --schema-and-data > ./jira881_mssql_scripter_out.sql

ls -ltr

vim jira881_mssql_scripter_out.sql

mssql-scripter is exceptionally useful. It let me export the whole database in text format, which makes it possible to manipulate the data with Unix filters and to compare exports taken after certain application operations, using tools like diff or the GUI tool Diffuse.

Very useful tool.

Sunday, 19 July 2020

Configure Apache to increase protection against XSS and ClickJacking attacks.

A good way to minimize the issues reported by automated security scans, and to avoid false positives, is to configure the application's reverse proxy correctly.

In this post, I will focus on Apache and show how a simple configuration can mitigate several of the issues found by automated tools.

In this example, I am scanning a simple Java web form running behind Apache, which acts as a reverse proxy.

Below is the initial scan report:

- X-Frame-Options Header Not Set

- X-Content-Type-Options Header Missing

- Absence of Anti-CSRF Tokens

- Cookie Without SameSite Attribute

- Charset Mismatch (Header Versus Meta Charset) (informational finding)

Below is an example configuration for the domain http://172.17.0.1/:

# Set these headers to send the right CORS response headers.

Header always set Access-Control-Allow-Origin "http://172.17.0.1"

Header always set Access-Control-Allow-Methods "POST, GET, OPTIONS, DELETE, PUT"

Header always set Access-Control-Max-Age "1000"

Header always set Access-Control-Allow-Headers "x-requested-with, Content-Type, origin, authorization, accept, client-security-token"

Header always set Access-Control-Expose-Headers "*"

# Added a rewrite to respond with a 200 SUCCESS on every OPTIONS request.

RewriteEngine On

RewriteCond %{REQUEST_METHOD} OPTIONS

RewriteRule ^(.*)$ $1 [R=200,L]

# Set x-frame-options to sameorigin to combat click-jacking.

Header always set X-Frame-Options SAMEORIGIN

# Set SameSite to strict to counter potential session cookie hijacking; it also helps protect

# from CSRF through the session cookie.

Header edit Set-Cookie ^(.*)$ $1;SameSite=strict

# Set X-Content-Type-Options to nosniff to avoid MIME sniffing.

Header always set X-Content-Type-Options nosniff

As you can see, the above rules address several of the issues reported in the scan, mainly CORS problems, click-jacking, cross-site attacks through cookies, and MIME sniffing.

Once that configuration is in effect, the number of reported issues is much lower:
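To make the Set-Cookie rule more concrete, here is a small sketch that applies the same regular expression and replacement to a cookie header value in plain Java. It only illustrates what the substitution does to the text; the class name, method name and cookie value are made up for the example, and Apache itself applies the edit to each Set-Cookie response header.

```java
import java.util.regex.Pattern;

public class SameSiteEditSketch {

    // Same pattern as in the Header edit rule: capture the whole header value.
    private static final Pattern WHOLE_VALUE = Pattern.compile("^(.*)$");

    // Append the SameSite attribute to a Set-Cookie header value.
    static String addSameSite(String setCookieValue) {
        return WHOLE_VALUE.matcher(setCookieValue)
                .replaceFirst("$1;SameSite=strict");
    }

    public static void main(String[] args) {
        // Hypothetical cookie as a backend might send it.
        String original = "JSESSIONID=ABC123; Path=/ExSession; HttpOnly";
        System.out.println(addSameSite(original));
        // prints: JSESSIONID=ABC123; Path=/ExSession; HttpOnly;SameSite=strict
    }
}
```

Note that the pattern matches any value, so the attribute is appended unconditionally, even to a cookie that already carries a SameSite attribute.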

The last remaining problem, related to CSRF, is an architectural problem in the application.

Modern web applications are encouraged to use tokens that are injected into HTML pages, forms, and dynamically generated content, and that are validated on the server side, to confirm that each request is submitted by the same user in the same session rather than forged by a malicious site.

Check https://en.wikipedia.org/wiki/Cross-site_request_forgery for more information.
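The token idea can be sketched in a few lines of Java. This is only an illustration under my own assumptions, not how the scanned application or any particular framework does it: the class and method names are hypothetical, and a real application would keep the token in the HTTP session and render it into each form as a hidden field.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.SecureRandom;
import java.util.Base64;

public class CsrfTokenSketch {

    private static final SecureRandom RANDOM = new SecureRandom();

    // Generate an unpredictable token to keep in the session and embed in forms.
    static String newToken() {
        byte[] raw = new byte[32];
        RANDOM.nextBytes(raw);
        return Base64.getUrlEncoder().withoutPadding().encodeToString(raw);
    }

    // Accept a request only if it carries the token stored for this session.
    static boolean isValid(String sessionToken, String submittedToken) {
        if (sessionToken == null || submittedToken == null) {
            return false;
        }
        // MessageDigest.isEqual compares the full byte arrays rather than
        // stopping at the first differing byte.
        return MessageDigest.isEqual(
                sessionToken.getBytes(StandardCharsets.UTF_8),
                submittedToken.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        String token = newToken();
        System.out.println(isValid(token, token));    // form rendered by the application
        System.out.println(isValid(token, "forged")); // request built by another site
    }
}
```

A forged cross-site request still carries the session cookie, but the attacking page cannot read the token from the victim's session, so the server-side check fails.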

ModSecurity does have rules that help with CSRF by injecting content, though if applied without changes they could break the application's functionality.